Introduction

It should be no secret that I am trying to design a new programming language. What is probably less well-known is that I have been trying to do so, at least in some way, since late 2012.

Why is it taking me so long?

The short answer is that I am trying to save the world from a tech apocalypse.

The long answer explains why I think that and why I believe that I might succeed.

Software Crisis

Have you heard about the Software Crisis?

It is still a problem today. Software is still hard to deliver, especially on budget and on time. And even when those targets are hit, the cost is still prohibitive.

On top of that, when software is released, it is often buggy, sometimes to the point that it is basically useless. Some bugs are okay, but not if they make the software impossible to use.

So, in essence, the software crisis is:

- The industry’s failure to deliver software,

- Or when it does, its failure to deliver on time,

- Or when it does, its failure to stay within budget,

- Or when it does, its failure to make the software good quality.

And the last one is the worst, because it enables the other tech crisis.

Cybersecurity Crisis

The Software Crisis is old news. Though it is still a big problem, that is not the biggest tech crisis today. That distinction belongs to something that is more dangerous, but that doesn’t even have an official name; I will call it the Cybersecurity Crisis.

Everyone can be breached, from small governments to big companies to big governments. And every person can become collateral damage.

Tools

The video below explains the basics of the tools that programmers use to combat cyber crooks.

Did you notice that it also explained why cybersecurity is so hard? It gave one explicit reason, and three others were implied:

- On the Internet, there is no such thing as distance. Every bad guy can attack anyone.

- The bad guys include everyone from unskilled script kiddies to ultra-capable nation states.

- Bad guys generally want information.

- Any information in the wrong hands is bad.

Implementations

If mathematics can make perfect locks, why is cybersecurity so hard? In fact, it should not even be a problem, right?

Unfortunately, even though the math can be perfect, that does not mean that the implementations of that math are perfect. Math must be implemented in programs, and almost all programs have bugs. Of course, bugs can be found and squashed, but doing that is just table stakes; it only stops unskilled crackers, not clever crooks or resource-heavy governments.

This is because even without bugs, implementations can “leak” information about the key (through timing, power usage, or a myriad of other ways), which can make the key easier to guess. Some leak a little, some a lot. And for many digital locks, even little leaks can make the key guessable with a significant chunk of computing power, which even smart individual crackers can bring to bear by building botnets.
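To make the timing leak concrete, here is a minimal Python sketch, assuming a secret (say, a password or MAC) that is checked byte by byte. The function names are hypothetical illustrations, and counting the bytes examined stands in for measuring time. In Python, the "constant-time" version only illustrates the idea, because the interpreter adds its own timing variation; real code should use the standard library's `hmac.compare_digest`, which exists for exactly this purpose.

```python
import hmac


def naive_equal(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Early-exit comparison (hypothetical name). Also returns how many bytes
    it examined, standing in for running time: the more leading bytes of the
    guess are correct, the longer it runs, and that difference is the leak."""
    if len(secret) != len(guess):  # length is assumed to be public here
        return False, 0
    examined = 0
    for s, g in zip(secret, guess):
        examined += 1
        if s != g:
            return False, examined  # stops at the first mismatch
    return True, examined


def constant_time_equal(secret: bytes, guess: bytes) -> bool:
    """Examines every byte no matter where the first mismatch is, by OR-ing
    together the XOR of each byte pair (hypothetical name)."""
    if len(secret) != len(guess):  # length is assumed to be public here
        return False
    diff = 0
    for s, g in zip(secret, guess):
        diff |= s ^ g
    return diff == 0


if __name__ == "__main__":
    secret = b"hunter2!"
    for guess in (b"xxxxxxxx", b"huxxxxxx", b"huntexxx"):
        ok, steps = naive_equal(secret, guess)
        print(guess, ok, steps)  # steps grow: 1, 3, 6
    print(constant_time_equal(secret, b"hunter2!"))  # True
    print(hmac.compare_digest(secret, b"hunter2!"))  # True
```

An attacker who can observe that difference can recover the secret one byte at a time instead of guessing all of it at once, which is why even small leaks matter.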

There are programming techniques to deal with this, but as those techniques spread, ever more subtle leaks are found, including leaks in the hardware itself. Sometimes leaks can be plugged, though at a cost in features or performance (as with Spectre and Meltdown), and sometimes they cannot (like KRACK).

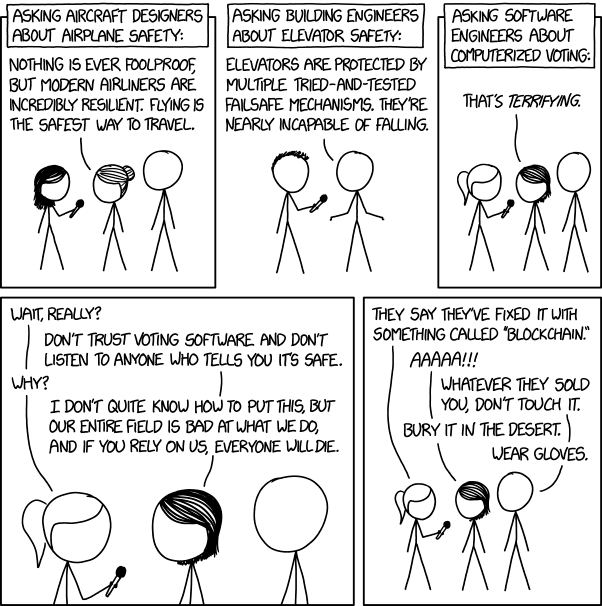

In other words, the software industry has no idea how to fix itself.

But at least the industry knows something is wrong.

Users

Of course, it is well-known that more often than not, users create cybersecurity problems themselves. Programmers cannot protect against that, so I will not talk about it.

Blame

Who or what is to blame for these crises?

There is no simple answer, but I believe much of it lies in the way that the software industry runs. Right now, the industry is focused on making money, no matter the cost to customers or anyone else, and that is not okay.

Before I go on, I need to make one thing clear: I support capitalism, not socialism, communism, or fascism; the latter three always lead to totalitarianism, famine, and suffering, and the former leads to abundance and freedom when it is working right.

But in this case, it is not working right.

Solution

As it turns out, the software industry is not the first to go through crises like this; engineering disciplines did as well. Those crises happened because people died from accidents caused by poor design.

Eventually, they had to add rigor and discipline to the practice. They did that through a demanding certification process and by imposing strict discipline on the design process itself.

Now, you probably think there is not much similarity between software “engineering” and real engineering. I agree; it’s an insult to real professional engineers everywhere to label the work that programmers do as “engineering.” Engineers deserve the title, and programmers do not.

But software is similar to engineering projects in one crucial way: they can both be critical infrastructure.

The reason that poor designs had deadly consequences in the past was that those designs were infrastructure: everyone had to use them, whether they wanted to or not. To protect those who had no choice, governments stepped in and mandated certification.

Well, that isn’t the case in software; programmers can create infrastructure without any certification. In fact, many have done it without any outside help. And yes, I know that the ability of a lone wolf to write a lot of code is what has allowed the field to advance so far so fast. But that is still not acceptable when the potential for damage is so high. Plus, the rate of advancement has plummeted.

Thus, it is definitely time for software “engineering” to become a real, training-and-certification-required profession.

But before it can, there must be standards, for without strong, well-defined standards, any certification is unenforceable. Also, programmers need better tools. Thus, to make software safer, we need to:

- Create standards to define high-quality software and the processes by which that quality is achieved and measured.

- Create a professional programmer certification to mandate and enforce those standards on critical projects.

- Create better tools.

Standards

The software industry has tried to write high-quality software before and failed, but I still think that it can be done, because there have been successes in writing critical software, the most notable of which is the software for the Space Shuttle.

To make good standards, a committee should be formed of people like those who built that software. Their job would be to create the processes used to judge accidents or cybersecurity breaches when they happen, and they should start with their own processes, as well as processes that are used in engineering. (They could also study failures, like the Therac-25.) Then they would iterate, asking hard questions of every standard. If a standard stood up to scrutiny, it would be adopted; otherwise, it would not.

The iteration should also generate ideas for new standards, which could then be weighed and judged also.

Professional Certification

Once standards are set, laws should be made to enforce them, along with a set of certifications that would be required in order to lead commercial software projects.

Of course, I also like open source, and I don’t want it to die. But I think safety is more important. Fortunately, I also think that open source doesn’t have to die, just as engineering did not kill DIY projects.

This is how it would work: open source or personal projects would remain as-is, and even companies writing software for internal use could continue as normal; however, companies that plan to release software for commercial use by the public, even if not related directly to profits, must have a “Software Engineer of Record” (SER), who, just like real engineers, would be certified.

An SER would be equivalent to what project managers are now, except that they must be programmers too. And if the SER used open source code in the project, it must be vetted to the same level as all other code.

Also, not every programmer would need to be certified; only the ones who want to serve as SERs, even if only for a subset of a project, would need to be.

Liability

One big question is how all of this will be enforced, especially the requirements for reviewing open source software. The answer is simple: the same way current engineering standards are enforced, through liability.

At first, that seems like a bad idea, but remember that it has worked for engineering professions. It will work for software too.

As an example, when the Hyatt Regency walkway collapsed, the engineering firm, as well as the Engineer of Record and the licensed engineer who negligently approved bad design changes without checks, were all held liable.

Better Tools

But better standards, certifications, and processes, though required, were not the only things that engineering needed; it also needed better tools, which had to be designed around the new processes.

Programming needs better tools as well. As an example, the Space Shuttle used a new programming language developed especially for the project: HAL/S.

And since programming languages are, by far, the most important tools, I have set out to create one that is designed specifically for the processes that make software, one that will make it easier to write software without bugs.

However, good processes cost more in time and money than poor ones do, so simply adopting a good process would only worsen the Software Crisis. That is not my vision for a good language, because I want to solve both crises.

Thus, when I say the language will be designed with the process in mind, I mean that it will both enable the process and reduce its cost, so that the process remains feasible in the current software market.

To put it in more concrete terms, the purpose of the language should be to assure quality while minimizing the cost of development and quality assurance.

I believe that not only can it be done, but that one well-built, extensible language is all that is needed. In fact, I already have a design in mind.

Over the next while, I am going to write a series of posts detailing its design and why I think it will work.