I now (2021-09-29) consider this post to be harmful. Please read this post for why and for an update to my opinions.

Introduction

Most people don’t even think about dynamic linking. I do, a lot.

I hate it and want it to die.

Let’s talk about why.

All tests were done on an x86_64 machine running an up-to-date Gentoo Linux 5.10.10 with clang 11 under the amd64/17.1/desktop/gnome/systemd profile.

Advantages of Dynamic Linking

The proponents of dynamic linking claim that it has four main advantages:

- Saving disk space.

- Saving RAM.

- No need to recompile every dependent when a dynamic library is updated.

- Dynamic addresses (ASLR) to mitigate ROP.

Saving Disk Space

The claim is that dynamic libraries save a lot of disk space because most of them are used by more than one dependent.

It turns out that for the vast majority of libraries, that is false, as an earlier post on this topic showed. To add another witness, I ran my own test using libs.awk from that post.

$ find /usr/bin -type f -executable -print \
| xargs ldd 2>/dev/null \
| awk -f libs.awk > results.txt

$ find /usr/local/bin -type f -executable -print \
| xargs ldd 2>/dev/null \
| awk -f libs.awk >> results.txt

$ find /usr/sbin -type f -executable -print \
| xargs ldd 2>/dev/null \
| awk -f libs.awk >> results.txt

$ find /sbin -type f -executable -print \
| xargs ldd 2>/dev/null \
| awk -f libs.awk >> results.txt

$ find /bin -type f -executable -print \
| xargs ldd 2>/dev/null \
| awk -f libs.awk >> results.txt

$ cat results.txt | sort -rn > fresults.txt

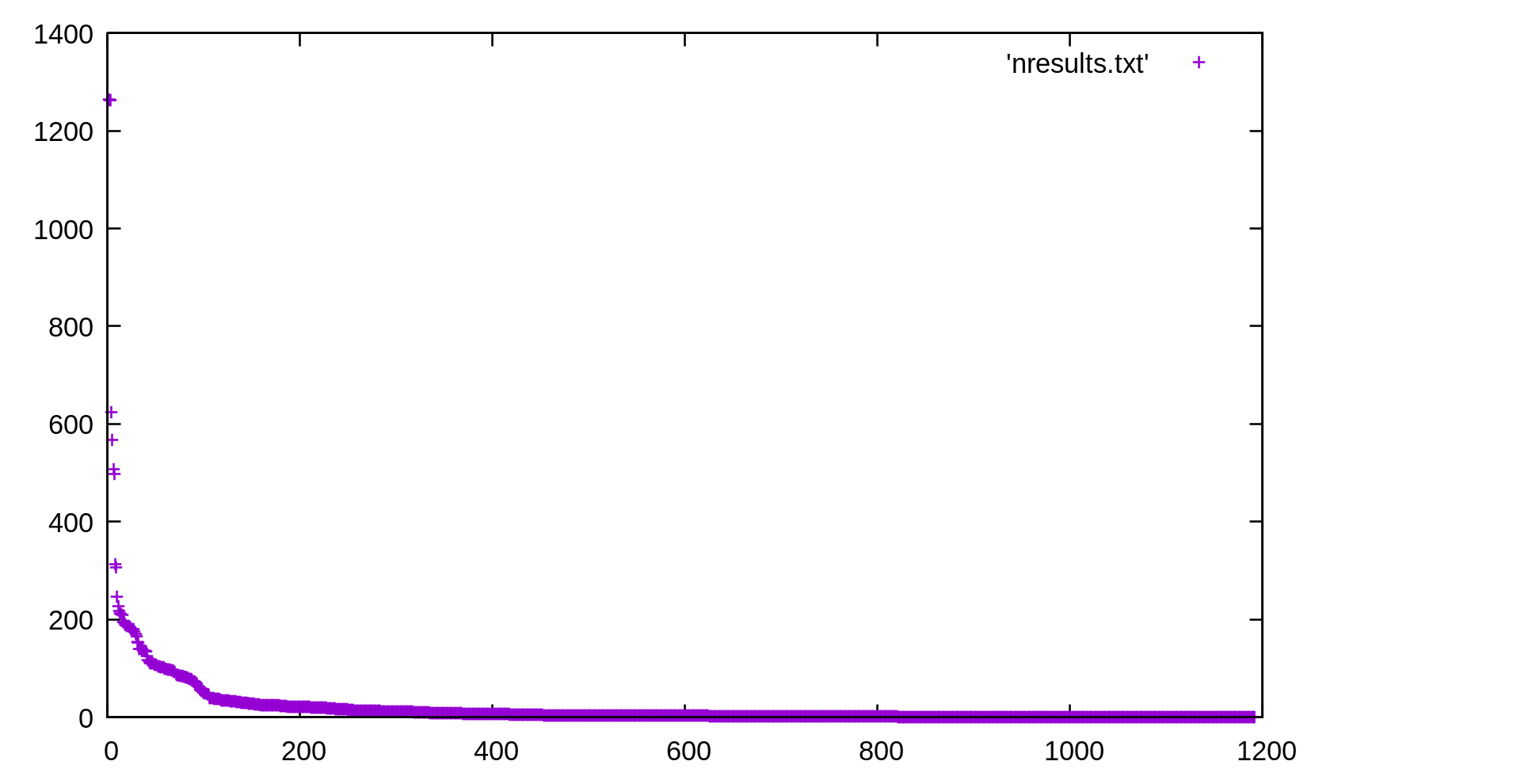

$ awk '{ print NR "\t" $1 }' < fresults.txt > nresults.txt

$ gnuplot

> plot 'nresults.txt'

Results are as expected.

However, there are a lot of users of a few libraries, and surely that will save space, right?

No. The same post has a section on how many symbols are used by each dependent of a library.

But I wanted to test it on my system.

First, I ran these commands:

$ go build nsyms.go

$ find /usr/bin -type f -executable -print \
| xargs -n1 ./nsyms > sresults.txt

$ find /usr/local/bin -type f -executable -print \
| xargs -n1 ./nsyms >> sresults.txt

$ find /usr/sbin -type f -executable -print \
| xargs -n1 ./nsyms >> sresults.txt

$ find /sbin -type f -executable -print \
| xargs -n1 ./nsyms >> sresults.txt

$ find /bin -type f -executable -print \
| xargs -n1 ./nsyms >> sresults.txt

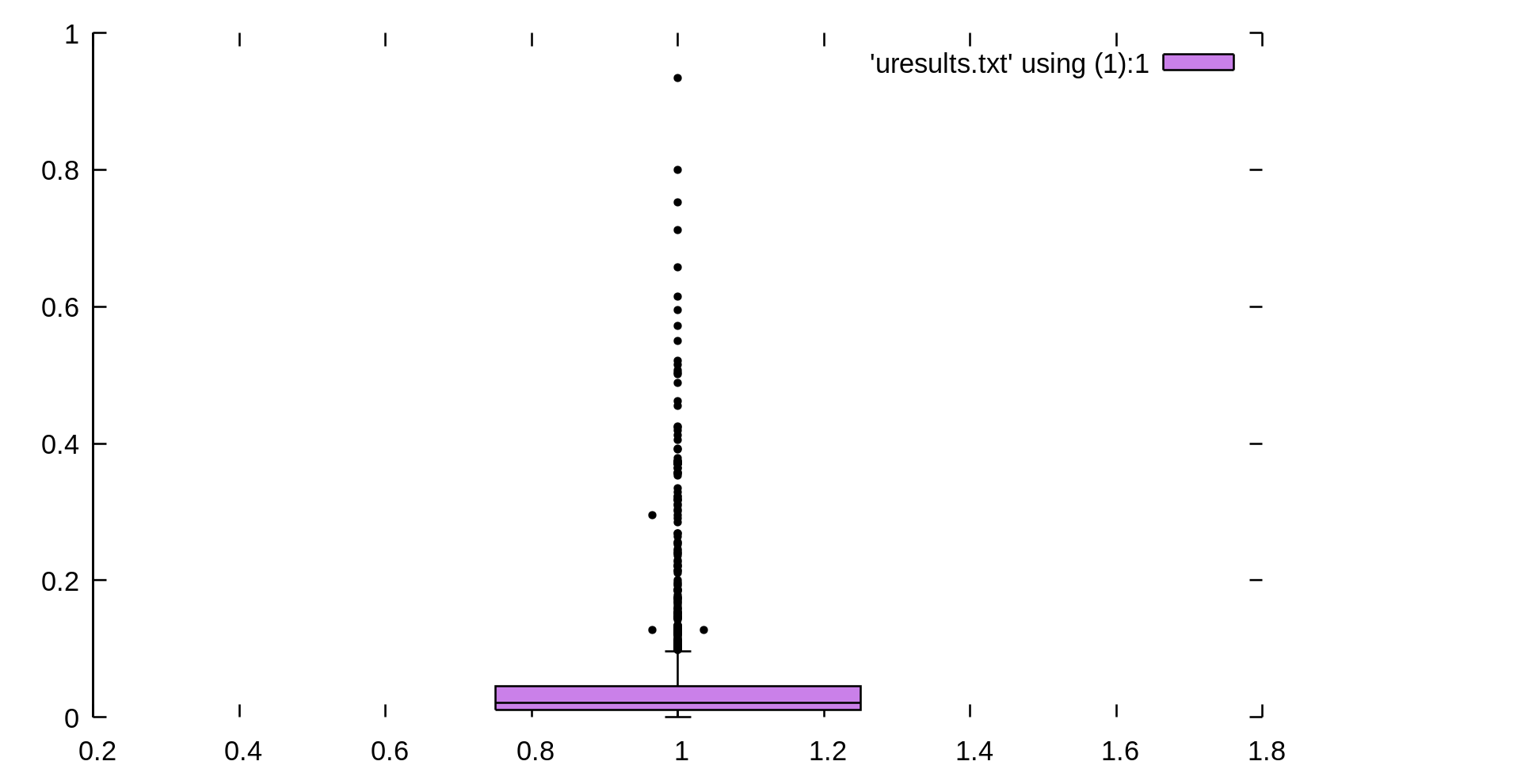

$ awk '{ print $4 }' < sresults.txt > uresults.txt

$ awk '{ n += $4 } END { print n / NR }' < sresults.txt

0.0493199

My average was 0.0493199.

Then, using the following commands with gnuplot:

$ gnuplot

gnuplot> set yrange [0:1]

gnuplot> set border 2

gnuplot> set style data boxplot

gnuplot> set boxwidth 0.5 absolute

gnuplot> set pointsize 0.5

gnuplot> set style fill solid 0.5 border -1

gnuplot> plot 'uresults.txt' using (1):1

I get the following graph:

So, as it turns out, shared libraries take less space on disk only for the most-used libraries. A shared library must be on disk in its entirety, while static linking copies in only the parts each program uses, and on average a dependent uses only about 4.9% of a library’s symbols. Assuming code is spread evenly across symbols, the shared copy only comes out ahead once there are more than 1/0.0493 (about 20.3) dependents, so it takes 21 or more dependents for a dynamic library to take less space on disk than just statically linking.
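The break-even arithmetic can be written down directly. This is a sketch of the simple model above, assuming that each static copy costs only the used fraction of a library’s code while a shared library costs its full size once, and ignoring PIC overhead and per-file costs.

```go
package main

import (
	"fmt"
	"math"
)

// breakEven returns the smallest number of dependents n for which a shared
// library (full size S, stored once) is strictly smaller than n static
// copies of the part each dependent uses (frac*S each), i.e. n*frac > 1.
// It returns -1 if frac is not in (0, 1].
func breakEven(frac float64) int {
	if frac <= 0 || frac > 1 {
		return -1
	}
	return int(math.Floor(1/frac)) + 1
}

func main() {
	const avg = 0.0493199 // average fraction of symbols used, measured above
	fmt.Printf("1/%g = %.2f, so the break-even point is %d dependents\n",
		avg, 1/avg, breakEven(avg))
}
```

With the measured average of 0.0493199, breakEven returns 21. Using floor plus one rather than a plain ceiling keeps the comparison strict: at frac = 0.25, four dependents only tie with the shared copy, so the function returns 5.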

Let’s use the numbers from the previous results to see how many libraries have 21 or more dependents:

$ awk '{ if ($2 >= 21) print $1 }' < nresults.txt | wc -l

211

On my system, only 211 of the 1192 libraries have that many dependents.

Yeah, it’s pretty safe to say that even if shared libraries are not worse than static linking, it’s probably a wash, and the only reason it is a wash is that a few libraries, like libc, are used by everything.

In fact, the only way the argument makes sense is if it is made in the context of having the static libraries on the system so that you can build software from source. Using my Yc library at one particular commit, I found that the shared library version was about 84% of the size of the PIC static version.

But then again, who really builds software on their own machine?

Besides crazies like me, of course.

Saving RAM

The argument that shared libraries save RAM is almost as ridiculous as the argument that they save disk space.

Here’s why: the OS maps the executable into the process’s address space, but it does not load a page into RAM until that page is actually touched (demand paging). Most commands don’t use all of their features in every run, so most runs never touch all of their code.

That, combined with the fact that a library’s dependents use, on average, only about 5% of its symbols, means that statically-linked programs can be smaller in RAM, especially if you take into account the code bloat that comes from the extra indirection (PLT stubs and GOT lookups) that shared libraries need.

Yes, shared libraries don’t need to be completely mapped in either, but how easy is it to dynamically link, at startup, an executable to a library that is only partly in memory? I don’t know how OSes do it, but I would suspect one or two map the entire shared library into the address space.

No Need to Recompile Dependents on Update

This is probably the most compelling argument on the surface. I understand that no one wants to recompile EVERYTHING when libc is updated. I get it.

But the desire is flat-out wrong, for one HUGE reason: DLL Hell, the name for the problem when a shared library is updated in a way that breaks a program.

“But that rarely happens!” I hear the hecklers insisting.

Maybe. But when it does, it can do anything from crashing programs at random to introducing security vulnerabilities.

I don’t know about you, but I find it unacceptable that my machine could have hidden vulnerabilities everywhere. It’s one thing if a vulnerability is caused by bad software that I can audit. It’s a completely separate thing if updating a library introduces vulnerabilities that no source code audit will ever show me!

Oh, and by the way, the breakages could be from API changes or ABI changes. Or probably from other factors that we haven’t even figured out yet.

In other words, to be sure that a shared library update does not break your tools in obvious or subtle ways, you need to recompile all of its dependents anyway. This is especially true in the presence of versioned shared libraries.

This is one of the reasons I run Gentoo and also why I would like to switch to a completely statically-linked profile, using musl.

Support for ASLR

To be fair to the proponents of dynamic linking, this argument seems to come up less often, probably because it no longer applies: with static PIE, statically-linked executables can be loaded at random addresses too.

I thought I would mention that, just in case.

Disadvantages

Now that we have looked at the advantages of dynamic linking, and either shredded them or rendered them moot, let’s look at the disadvantages.

- No reproducible builds.

- Binaries cannot be transferred.

- Complexity.

- Slower startup time.

- const data can be writeable.

- Existence of ldd.

No Reproducible Builds

This one is, by far, the BIGGEST reason to use static libraries. It’s so important, I put a link in the section header.

I have learned this because I am starting the process of designing a build system.

Yes, I know (language warning). Don’t @me.

I am writing this build system because I need a cross-platform one, and CMake doesn’t fulfill my needs. I intend to use it only on my own projects.

I think reproducible builds are something to build into the design from the start, even if it is not possible to support them in the first version, so I have done a lot of thinking about what they would require.

This is a small list of what they require:

- Exact same config
  - used to do a clean build
    - with the exact same compiler(s)
      - which means the compiler(s) need(s) a reproducible build too.

To get the exact same compiler, you would need to make sure that you can bundle its required shared libraries with it and shim them in when running it on another machine. Good luck with that, especially since you may actually need to bundle in the dynamic linker too!

With a statically-linked compiler? Easy! The only requirement for it is that the OS version supports the exact same syscalls that the compiler needs. Just bundle the compiler, and one major obstacle to reproducible builds is gone.

In fact, I think I would go so far as to say that reproducible builds are nigh impossible, if not actually impossible, in the presence of dynamic linking.

And if I cannot convince you of the utility of reproducible builds, then I can’t convince you of anything.

Binaries Cannot Be Transferred

With a statically-linked binary, you can copy it to another machine with an OS that supports all of the needed syscalls, and it will just run. Period.

If you want to do that with a dynamically linked executable, you have to do exactly what I mentioned above. In fact, this fact about dynamically-linked binaries is why they are such an obstacle to reproducible builds.

And producing statically-linked binaries by default is one of the reasons the Go language became popular.

Complexity

Have you really thought about how much complexity there is behind dynamic linking?

First, read this blog post series on linkers.

Then realize that the dynamic linker itself has to be statically-linked because if it isn’t, who will link the linker?

Then realize that the operating system is basically executing code you don’t control when you run a program you wrote.

Then realize that all of this actually makes the operating system, the most important thing, much more complicated!

Then remember that all of that complexity happens at startup time.

Slower Startup Time

I think this link shows it very well: a 2x difference, amounting to 70 microseconds.

Does that matter? Well, if you run a lot of small programs, yes, it does. And you do every time you run a shell script.

For interactivity purposes, it doesn’t really matter, though.
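A 70-microsecond gap is too small to see in a single run, so measurements like this are usually averaged over many runs. Here is a minimal Go sketch, assuming you have a static and a dynamic build of the same trivial program (the paths in the usage comment are hypothetical): it times n back-to-back runs of each binary. The result includes Go’s own process-spawning overhead, so only the difference between the two binaries is meaningful.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"os/exec"
	"time"
)

// avgRunTime runs a command n times back to back and returns the mean
// wall-clock time per run. For a trivial program, that time is dominated
// by process creation, loading, and (if any) dynamic linking.
func avgRunTime(n int, name string, args ...string) (time.Duration, error) {
	if n <= 0 {
		return 0, errors.New("n must be positive")
	}
	start := time.Now()
	for i := 0; i < n; i++ {
		if err := exec.Command(name, args...).Run(); err != nil {
			return 0, err
		}
	}
	return time.Since(start) / time.Duration(n), nil
}

func main() {
	// Usage (hypothetical paths): startup-bench ./hello-static ./hello-dynamic
	const runs = 1000
	for _, bin := range os.Args[1:] {
		d, err := avgRunTime(runs, bin)
		if err != nil {
			fmt.Fprintln(os.Stderr, bin, err)
			os.Exit(1)
		}
		fmt.Printf("%-24s %v per run (mean of %d)\n", bin, d, runs)
	}
}
```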

Why would static executables be faster? Shouldn’t there be less to load with dynamic linking?

Yes, but that ignores a big point: getting more stuff off disk when you are already getting stuff off disk is cheap. Getting stuff off disk when you were not already doing that is expensive, and a dynamically-linked program needs the dynamic linker to find, open, and map each of its libraries separately, on top of the executable itself.

const Data Can Be Writeable

It turns out that the dynamic linker will sometimes map segments containing const data into writeable memory. There are so many problems with that.

But it never happens with static linking.

Existence of ldd

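It turns out that ldd can be manipulated into arbitrary code execution: in some circumstances it ends up running code from the program it is inspecting, which is why its own man page warns never to use it on an untrusted executable.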

Neutral Traits

Some neutral traits, ones that I believe give no advantage to either dynamic or static linking, are:

- Performance

Performance

While the performance of a statically-linked program will (probably) be better than its dynamically-linked equivalent, due to better optimization opportunities and less overhead on function calls, the system as a whole might have better performance with dynamically linked libraries that are shared across processes.

Then again, we saw above that the sharing is not really high…

Conclusion

I might add to this post later as I think of more disadvantages of dynamic linking, but I think that is enough for now.

In short, dynamic linking breeds complexity and insecurity, and it prevents us from correctly auditing software and from building it reproducibly.

It needs to die.